Researchers have developed a system called HoloRadar that lets robots look around corners using radio waves. An AI model interprets the data.

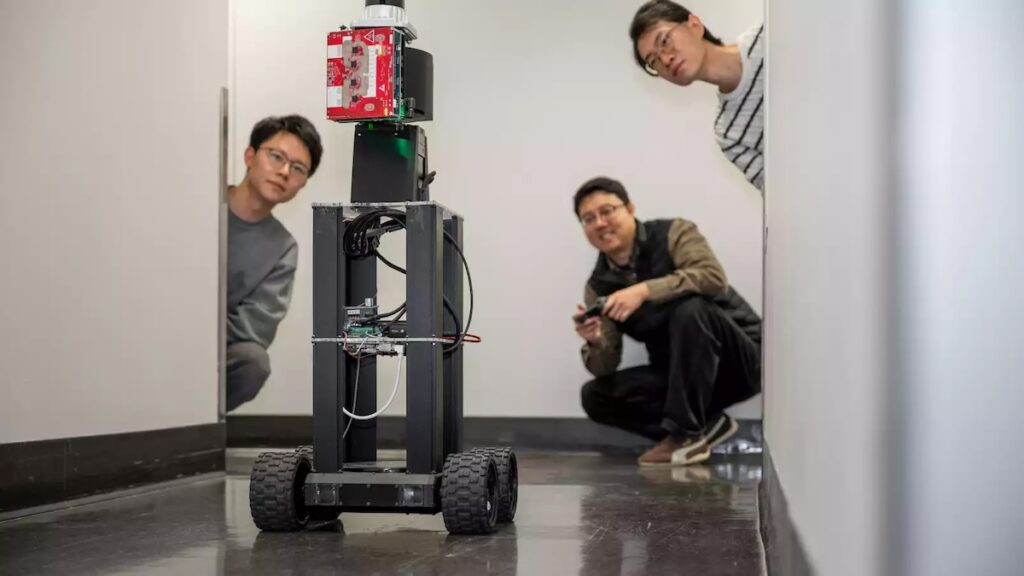

A team of researchers at the University of Pennsylvania has developed a system that allows mobile robots to detect objects before those objects enter the robot's direct field of view. The technology uses a radar module and a specially designed AI model to detect obstacles around corners.

For example, the robot identified pedestrians in a winding hallway. The researchers describe their results in a paper at the Annual Conference on Neural Information Processing Systems (NeurIPS). Their robot actively scans its surroundings by sending out high-frequency radio signals via the radar module.

These signals hit flat surfaces such as walls, floors or ceilings and are reflected by them. Some of the waves also propagate into areas outside the primary field of view and bounce off objects there. By receiving these echoes, the system gains information about objects to which it has no direct line of sight.

Robot sees around corners with HoloRadar: AI mind for radio waves

In practice, however, conventional signal processing reaches its limits. Individual radio pulses are reflected several times on their way through winding rooms. The robot's receiver therefore sits in the middle of a tangle of returning radio waves. This overlap of signals makes it impossible for standard systems to cleanly separate the information.

The researchers solved this problem with a tailor-made AI model. The software combines classic machine learning with in-depth knowledge of physical laws. The system processes data in two consecutive steps. First, the AI helps the robot identify those signals that have returned via specific reflection paths.

This tells the software which detours the waves have taken through the space. In a second step, the system again draws on its built-in physical model, using it to calculate where the previously identified reflected signals originated. In this way, the robot determines the position of obstacles behind a visual barrier.
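The article does not spell out the researchers' physical model, so the following is only a minimal sketch of the textbook mirror-image idea behind locating an object from a reflected echo. It assumes a 2D scene, a single bounce, and a flat wall whose position is already known. The function names `apparent_position` and `reflect_across_wall` are hypothetical and not taken from the HoloRadar work.

```python
import numpy as np

def apparent_position(range_m, bearing_rad):
    """Where the echo *seems* to come from if the radar (at the origin)
    assumes the signal travelled in a straight line."""
    return np.array([range_m * np.cos(bearing_rad),
                     range_m * np.sin(bearing_rad)])

def reflect_across_wall(point, wall_point, wall_normal):
    """Mirror a 2D point across the wall line that passes through
    wall_point and has normal vector wall_normal."""
    n = np.asarray(wall_normal, dtype=float)
    n = n / np.linalg.norm(n)                      # unit normal
    p = np.asarray(point, dtype=float)
    signed_dist = np.dot(p - np.asarray(wall_point, dtype=float), n)
    return p - 2.0 * signed_dist * n               # flip to the other side

# Illustrative numbers (assumed): the wall is the line x = 3.
wall_point, wall_normal = [3.0, 0.0], [1.0, 0.0]

# The radar measures an echo at this range and bearing; taken at face
# value, that places a "ghost" object behind the wall.
ghost = apparent_position(np.hypot(4.0, 4.0), np.deg2rad(45.0))

# Mirroring the ghost back across the wall gives the estimated position
# of the real object that produced the one-bounce echo.
estimate = reflect_across_wall(ghost, wall_point, wall_normal)
print(ghost, estimate)
```

For a single bounce, the length of the reflected path equals the straight-line distance to the mirrored point, which is why flipping the ghost back across the wall recovers the source. Real scenes involve several bounces and unknown surfaces, which is the part the article says the AI model handles.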

Radar sees what cameras cannot

The robot uses this information to create a three-dimensional reconstruction of its entire environment. This virtual map also depicts areas that were originally outside the field of view. In the past, experts have presented devices with similar capabilities but using visible light. Such systems evaluate shadows or indirect reflections from light sources.

A major disadvantage of light-based methods is their strong dependence on the prevailing lighting conditions. They usually require controlled lighting to provide precise data about hidden objects. Without an optimal light source, these sensors lose reliability. The University of Pennsylvania system, however, operates without such controlled lighting.

The radar waves do not require visible light to detect obstacles or people behind corners. This enables the use of mobile robots under real-world conditions. While optical sensors can fail in poorly lit environments, the radar system continuously scans the structure of the room. The technology thus offers a solution for navigating winding building layouts.

By combining physical models and learning algorithms, the research group achieved a new form of perception. The machine does not treat the overlapping signals as interference, but instead uses them to determine position. This means that people are detected before they are directly visible to the system. This technical approach expands the possibilities of spatial image processing.
